Written by Jeremy Rozen

September 25, 2012

WAN optimization has long been a mechanism for reducing bandwidth cost and moving more data over a network faster. However, TRAC's upcoming WAN Optimization Spectrum report shows that organizations no longer see WAN optimization deployments as simply a way to better manage capacity of their traditional wide area networks. Five key findings of this state-of-the-market study include:

User experience is becoming the key performance indicator for evaluating WAN performance

TRAC's recent survey of 332 users who implemented WAN Optimization techniques showed that the quality of end-user experience is the leading performance indicator for evaluating WAN performance by a significant margin (53% of respondents, compared to 11% for the next highest measure).

This finding indicates that organizations are discovering that traditional metrics, such as network throughput or data reduction, are no longer serving as an overall indicator of how the solution provides value for an end-user. As a result, network administrators cannot focus exclusively on optimization or acceleration capabilities anymore and need to ensure that they have full visibility and control when it comes to application traffic over the WAN.

WAN optimization is not a single market

TRAC's research identified five sub-markets for WAN optimization. These are:

- Datacenter-to-Branch

- Datacenter-to-Datacenter

- Delivery to Mobile Users

- Cloud-to-Branch

- Visibility and Control/Bandwidth Management

Each of these markets has different dynamics, growth rates and unique buyer requirements. As a result, WAN optimization technology vendors fare differently when they are evaluated in each of these areas, and some of them compete in only one or two markets.

Emergence of cloud and mobility is changing the definition of Wide Area Network

Users are increasingly accessing business-critical applications on mobile devices, including smartphones, laptops and tablets, from a variety of locations, while expecting optimal quality of user experience. However, traditional WAN optimization solutions were not designed to support these types of use cases. TRAC's WAN Optimization Spectrum study shows that software solutions for optimizing application delivery to mobile devices are the number one technology capability that organizations are looking to deploy in the next 12 months. Currently, only a few vendors are able to provide this type of capability.

TRAC's Spectrum study shows that one of the key reasons organizations are replacing WAN optimization solutions with products from other vendors is the ineffectiveness of their existing solutions when deployed in Cloud environments.

As these trends progress, it is becoming increasingly difficult to determine the “edge” of the WAN, the spot where the company’s conceptual framework ends and other vendors or users begin. A Wide Area Network, which previously may have only encompassed the company’s physical locations, can now be thought of as including mobile users, cloud providers and potentially anyone else the WAN optimization technique needs to extend to. The definition of a Wide Area Network is changing and organizations are increasingly finding that traditional approaches for WAN optimization are becoming less effective.

Emergence of new deployment methods

TRAC's Spectrum study shows that while physical appliances are still the predominant method for deploying WAN optimization solutions, other methods of implementation, including software and Cloud services, are showing strong growth rates.

TRAC's research shows that organizations that are deploying WAN optimization are still not optimizing 54% of their remote locations. This comes as a result of challenges associated with deploying physical appliances and the inability to cost-justify these technology purchases. The innovations in the WAN optimization space and new deployment methods have created a number of new use cases for deploying these solutions and expanded the addressable market for these vendors.

WAN optimization technologies are becoming one of the key enablers of major IT projects

Organizations are reporting that some of the key reasons they are deploying (or replacing) WAN solutions are deployments of technologies such as video, mobility, virtualization and Cloud. Additionally, organizations are increasingly finding WAN optimization technologies effective when dealing with emerging IT management challenges, such as Big Data. The value of these technologies and IT projects heavily relies on network performance and the quality of user experience. For that reason, organizations are seeing WAN optimization capabilities as integral elements when ensuring that they get the most out of the investments that they are making in modernizing their IT infrastructure and bringing their IT services closer to end-users.

These trends are significantly impacting how WAN optimization solutions are being evaluated and deployed. Additionally, they are changing the role that WAN optimization technologies play in end-user organizations. The addressable market for WAN optimization solutions is increasing, and the impact of these solutions is spreading across different areas of IT and business and benefiting more users. In addition, WAN optimization has been elevated to a more strategic level in the CIO’s viewpoint. What was once seen mostly as a cost-saving measure now has real strategic impact on the entire organization and addresses some of the key items on CIOs’ agendas. In order to take advantage of this opportunity, WAN optimization vendors need to prove that they are willing to venture out of their comfort zones, innovate and build capabilities for effectively addressing new use cases for WAN optimization.

Written by Bojan Simic

August 03, 2011

On July 6th, Compuware announced that it had acquired dynaTrace Software for $256 million in cash. dynaTrace provides solutions for tracing and capturing application transactions, code-level application diagnostics and real-user experience monitoring (RUM). The company has particular strengths in monitoring Java applications and its products are used in both pre-production and production.

Analyzing drivers behind this acquisition uncovers some of the larger trends in the APM market and brings to light new requirements for creating competitive advantages in this space.

Compuware's position prior to the acquisition

Prior to the acquisition, Compuware's APM portfolio consisted of the Vantage product line and Gomez products acquired in October of 2009. The Gomez acquisition enabled Compuware to offer arguably the broadest range of application performance management capabilities in the market. These capabilities include both synthetic and real user monitoring, monitoring from both inside and outside of the corporate firewall, network, database, server and application component monitoring, as well as monitoring last mile, cloud and mobile performance. Additionally, Compuware had an aggressive agenda for integrating Gomez and Vantage products and the company was able to follow through on this plan and allow their customers to leverage the capabilities of both of these technologies in an integrated way.

It looks like Compuware was in good shape when competing in the APM market prior to this acquisition. So why did they feel like they needed to spend $256 million on purchasing a company with $26 million in TTM revenues?

Completeness vs. effectiveness of APM product portfolios

Competitive positioning of many APM vendors has been largely focused on the completeness of their product portfolios and making sure that they have all of the major boxes checked. User experience management, transaction monitoring, monitoring application components or parts of the delivery infrastructure are just some of the areas that vendors are looking to cover when trying to position themselves as complete APM solutions. However, the fact that Compuware had user experience monitoring, transaction and Java monitoring boxes checked and still decided to spend more than half a billion dollars on acquiring Gomez and dynaTrace, user experience monitoring and transaction tracing providers, is proof that gaining true competitive advantage in the APM market is becoming a more complex task.

Preliminary findings of TRAC's APM survey show that end-user organizations are evaluating APM solutions predominantly based on effectiveness in their specific use cases and the specific pain points that they are trying to address. Even though organizations see the value in managing multiple aspects of application performance management through an integrated solution, what matters to them more than the completeness of APM portfolios are the capabilities that vendors are providing within each of these segments. Also, solutions that are focusing on varied individual segments of APM are based on different underlying technologies and approaches for collecting the data, which in many cases determines their effectiveness in different usage scenarios. Based on that, user experience monitoring solutions can be segmented into 2-3 separate sub-markets and the same goes for transaction monitoring products. The dynaTrace acquisition enables Compuware to add strong capabilities in the areas of APM that the company was not focusing on, such as pre-production monitoring. More importantly, the acquisition allows Compuware to strengthen its offerings in areas that were included in the company's pre-acquisition portfolio, such as transaction tracing, deep dive monitoring and RUM. Even though the acquisition expands the array of capabilities that will allow the company to be more effective in a broader range of APM use cases, the real story of the acquisition, from a technology perspective, is that it allows Compuware to gain new strengths across different functional segments of APM.

The best way to describe the APM vendor landscape is as a set of different micro landscapes that are being defined by the types of applications and technology environments that organizations are looking to manage or blind spots that they are trying to close. The acquisition enables Compuware to significantly increase the number of usage scenarios where their solutions are able to effectively address application performance challenges.

Impact on competitors

This move can impact other APM vendors both short and long term. Short term, it can be expected that many vendors will try to take advantage of the fact that Compuware just acquired a technology that significantly overlaps with their Vantage technology and go after current Vantage customers. The same day the acquisition was made public, Compuware announced that their Vantage Analyzer product is being replaced and, due to end-user concerns about the support that Compuware will be providing for Vantage products going forward, this strategy could result in new customer wins for some vendors.

Long term, this acquisition could have a more significant impact on the APM market and cause additional consolidation and, more importantly, a shift in how requirements for effective application performance management are defined. Some of the other traditional leaders in the APM market that have all of the major boxes checked when it comes to completeness of their product portfolios may also find that they need to take a good look into each of these boxes and see if their capabilities are really well aligned with the key requirements for managing today's complex application environments. Those vendors that come to the same conclusion as Compuware did, after evaluating their portfolios, will be facing a "build or buy" quandary which, given how fast the requirements of this market are changing and the technology backgrounds of their solutions, should not be a tough dilemma. The real question is: How many of the vendors who are left in this market can be good acquisition targets? Even though new vendors are constantly entering this market, the list of acquisition targets that can help create true competitive advantages across a broad range of deployment scenarios is still fairly short.

Written by Jeffrey Hill

May 26, 2011

TRAC's article from October, 2010, "BI Becoming the Key Enabler for IT Performance Management" talked about how the complexity of managing IT performance is driving the need for new analytics and reporting capabilities for IT management solutions. These capabilities are especially important when it comes to monitoring network and application performance – a deeper and wider view of the application infrastructure is vital as companies add more complex and dynamic IT services. Many organizations that use several APM solutions at the same time report that they still do not have enough visibility into application performance and, as a result, struggle with troubleshooting and repairing performance issues. The relevance of application performance data largely depends on how well they fit an organization's particular use case and thus the metrics collected "out of the box" by APM solutions may not fit the problems that they are trying to solve.

ExtraHop Networks recently announced version 3.5 of their Application Delivery Assurance system, a key part of which is a technology called Application Inspection Triggers. This capability allows organizations to define metrics to be captured at network speeds through the use of scripts that are customized to solve a particular problem. These metrics can be uniquely tailored to meet the specific needs of each organization or IT department with custom alerts and reports suited to the metrics being collected. The granularity of the collection process can be as fine as required to isolate problems, even down to collecting information about individual users, files or program statements.

What makes this announcement even more interesting is the core technology that ExtraHop uses to monitor network and application performance. ExtraHop's solutions are an interesting combination of capabilities for both network and application performance monitoring. The company provides appliances for passive monitoring of network traffic, but differs from traditional network visibility tools in that it provides increased visibility into application performance, as well as the ability to monitor the impact of different infrastructure elements, such as storage or database, on application performance. The depth of information that ExtraHop's appliances capture and the fact that this information is captured in real time make Application Inspection Triggers even more effective because of the flexibility of the metrics that organizations are able to capture.

From an industry standpoint, there is no shortage of vendors that monitor application performance, but the real question is what capabilities really make the difference between seeing wider and deeper into the application infrastructure and having the ability to solve performance problems. In addition to ExtraHop, other vendors are realizing that what really makes the difference in performance management is how the data that is collected is being processed and presented to IT decision makers. A good example of a company that understands the value of making data relevant is SL Corporation, whose RTView product enables organizations to get more out of the data collected by their APM tools by aggregating it and providing an additional level of analysis and reporting. Another example is Prelert, which provides a solution that leverages data collected by other APM tools and applies a self-learning technology to improve the ability to troubleshoot and repair application performance issues.

The well-controlled and monitored application infrastructure that existed ten years ago has been replaced by a dynamic and sometimes unpredictable mixture of local servers, virtualized applications and the cloud. In order to be able to effectively manage these types of environments, organizations need to be able to do more than just monitor packets, servers or application components and ensure that performance data that is being delivered to IT staff is truly relevant. One of the key value propositions of business intelligence, the ability to deliver the right information to the right people at the right time, is becoming a key differentiator for APM vendors and ExtraHop's recent announcement is a significant step in that direction.

Written by Jeffrey Hill

May 20, 2011

On April 4th, 2011, SmartBear Software enhanced its traditional focus on application testing and QA by acquiring AlertSite, a developer of application web performance monitoring and management tools. SmartBear's products emphasize the importance of managing the entire application lifecycle from code review to application profiling and automated testing for QA. By way of contrast, AlertSite is focused on synthetic monitoring and testing of web site performance. The product lines of both companies are complementary and together form a suite of applications that monitor and manage applications from inception to deployment and actual use.

This is a good move for SmartBear for several reasons. To begin, both companies have sizeable communities of users: SmartBear has a development and QA community of more than 100,000 users, while AlertSite's DejaClick has had more than 250,000 downloads, providing an opportunity to cross-sell and up-sell across both communities. While AlertSite's approach for monitoring Web performance has been resonating well with their customers, the company lacked resources to truly challenge companies that have been leaders in this space such as Gomez and Keynote Systems. Through this acquisition, AlertSite gains additional marketing muscle and research and development resources.

However, when you take a closer look into the product portfolios and strategies of these vendors, it becomes apparent that some of the key dynamics in the application management market are influencing this acquisition.

-- The concept of a lifecycle of application performance management has been present in the market for some time and has been accepted mostly by vendors who provide solutions for application testing in pre-production. Vendors are becoming increasingly aware of the importance of providing solutions which identify how the lifecycle of application management affects both end-user experience and business goals.

-- The continuing growth of cloud services, especially the emergence of hybrid clouds, is driving the need for application performance monitoring solutions that are agnostic about where applications are hosted. Companies are recognizing that the most effective strategy for the deployment of testing and monitoring solutions is to move monitoring points closer to the end-user. The fact that AlertSite's DejaClick solution is browser-based aligns well with this trend and its users have visibility into the quality of end-user experience, regardless of whether their applications are hosted in the cloud or in an on-premise managed datacenter.

-- The importance of this acquisition to the industry should not be overlooked, especially in the context of other recent events such as Compuware's 2009 acquisition of Gomez or Neustar's acquisition of Webmetrics in 2008. All of these acquisitions signal the growing importance of monitoring application performance and the end-user experience from outside the corporate firewall, which is a very valuable capability for managing cloud environments. They also reinforce the need for bigger players in the market to either develop these capabilities in-house, which is a very time-consuming and costly proposition given how these solutions are deployed, or to acquire them in a market where the number of available companies is diminishing with somewhat alarming swiftness. For companies looking to spend some money to acquire this capability, Keynote and Catchpoint are among the few remaining targets.

This acquisition gives SmartBear opportunities that go well beyond extending their current community and creating new channels for AlertSite's products. SmartBear now has an opportunity to capitalize on market trends that call for a more user-centric approach throughout the entire lifecycle of application management. Taking advantage of these trends will require tighter integration with AlertSite's product portfolio, as well as building product capabilities that enhance their appeal to companies that are adopting a DevOps methodology.

Web performance has become critical to conducting business – nothing discourages customers or potential customers faster than poor website performance. However, TRAC's recent research shows that issues with the quality of end-user experience for websites occur 10 times more frequently than website outages. For that reason, organizations report losing twice as much revenue due to issues with end-user experience as they do for issues with site availability, proof that end-user experience should be at the core of application performance management initiatives. Including user experience in the entire lifecycle of application management should lead to better customer retention and increased revenue.

Written by Bojan Simic

March 09, 2011

TRAC's recent article "Application Performance Management - The Journey of a Technology Label" talked about how diluted the APM space has become and that it is getting more difficult for end-user organizations to distinguish between vendors' marketing messaging and the true value that these solutions can deliver. Sometimes, we would get vendor requests for a call to discuss their latest customer win, the selection process that an end-user organization went through and the type of IT environment that they are managing. The most impressive part about some of these calls is not necessarily how the vendor won that deal, but how some vendors they were competing against even got to be considered in that type of usage scenario.

Recently, we had two application monitoring vendors referring to the same competitor as a company that is "having more success against us than ever before" and "gets involved in some of our deals, but we very rarely see them win". On the other hand, when talking with end-users about the same product, the feedback that we get can range anywhere from them raving about the product to complaining about all of the "blind spots" that it leaves. So, is the solution that this vendor provides good or bad? It is actually very good when used for addressing problems that it was designed to address.

The application performance monitoring market has been growing very fast and some vendors are trying to grow their presence by going after nearly any environment that lacks visibility into application performance. This typically results in them sometimes getting invited to the table in usage scenarios where they have very little chance of winning, while it makes it more difficult for companies that could actually add value in those environments to fight through marketing noise and even be considered. The reality is that even though there are a lot of vendors that play in this space, due to new market dynamics, "shortlists" of vendors that can be truly effective in certain usage scenarios are sometimes very short.

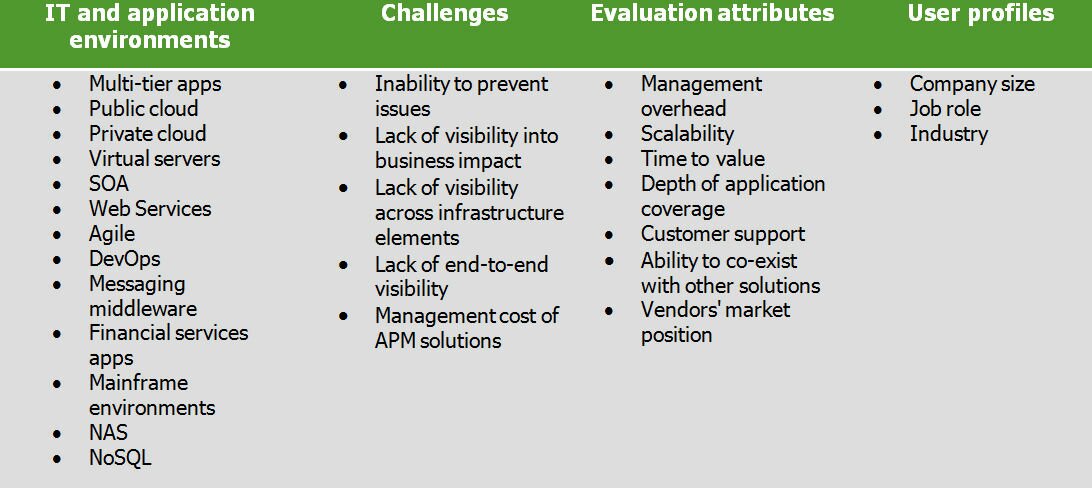

To shed light on this issue, we have been interviewing end-user organizations and trying to find out what they really care about when evaluating technology vendors. The findings came down to three key areas: 1) fit for their IT environment; 2) problems that they are trying to solve; 3) product attributes that they are looking for. We also found significant differences between preferences of different job roles and companies that fall into different categories based on the size of the organization and the industry sector that they compete in. The list of individual criteria that impact their selection processes was fairly long and some of them are listed below.

Our goal was to publish a study that would help end-user organizations make the correct decisions about selecting solutions for monitoring application performance that would be the best fit for their needs. However, the variety of their goals, selection criteria, types of IT environments and company profiles created a challenge of coming up with recommendations that would be truly relevant and actionable and not just a superficial overview of the market.

We realized that the complexity of the application performance space calls for a new report format and the idea for it came straight from our research. Many organizations are reporting that a Web browser is becoming the center of their IT performance efforts, as technology initiatives such as SaaS, IaaS and PaaS are changing how computing resources are being used and information is delivered to business users. As a result, we built a Web application for publishing the research findings that will allow end-user organizations to access information they really care about in a more interactive fashion.

With all due respect to good old PDF, there is too much complexity in the application performance monitoring market to fit research findings in a "one size fits all" publishing format and still make them actionable and relevant for different profiles of end-users. We have been advocating that information only has value if it is actionable and relevant so now it is time for us to "walk the walk".

If you are an end-user of IT performance technologies, we are interested in hearing your thoughts about the new report format before it gets published. TRAC will be hosting a series of demo presentations of the new report format and if you are interested in attending, please send a note to

Written by Bojan Simic

December 20, 2010

The market for Web performance solutions has experienced significant changes in 2010 and many of the trends that have been driving new dynamics in this market are expected to be even more accelerated in 2011.

Traditionally, these types of solutions have been predominantly deployed by large Web properties (media, entertainment, social networks, etc.) and organizations that are either using their websites to generate revenues or rely on Web portals to share information internally. However, changes in the way that business users are accessing corporate data are causing Web applications to become more than just revenue generating, branding or collaboration tools. In 2010 we have seen applications that are being accessed through Web browsers and delivered over the public Internet become more critical beyond business-to-customer (B2C) environments, as organizations are increasingly using these applications to communicate with their employees and partners.

End-user organizations who participated in TRAC’s recent survey reported that they anticipate 11% of overall network traffic that is currently being delivered over corporate private networks to be delivered over public Internet in the next 12 months. Deployments of SaaS applications, more organizations considering and deploying Infrastructure-as-a-Service (IaaS) models and looking to achieve cost savings by leveraging advantages of public Internet are increasing the importance of managing Web performance. As organizations are becoming more dependent on the performance of Web applications they are also realizing that some of the same trends that are driving increases in the importance of these applications are also posing new performance management challenges.

New challenges of managing Web performance are forcing both technology vendors and end-user organizations to respond, which in turn is driving new dynamics in this market. Based on TRAC’s recent research, we identified ten areas that are significantly impacting how different flavors of Web performance management solutions are being deployed and managed, as well as some of the capabilities that are becoming more important in this market.

Written by Bojan Simic

November 29, 2010

Industry analysts tend to classify vendors into technology "buckets" and create "labels" for each of them, as that makes it easier to compare products, capture key trends and provide context around problems that these products are addressing. This method also resonates with some technology marketers, as it allows them to partially benefit from promotions that other vendors and media are conducting around a label of a technology bucket their product was put into.

The term "application performance management" (APM) has been one of the hottest technology "labels" over the last few years. Performance of enterprise applications impacts nearly all of the key business goals, and it shouldn't come as a surprise that technology solutions for managing performance of these applications have been very high on IT agendas. With that said, it should be even less of a surprise that technology vendors who are involved in managing the delivery of applications to business users in any way realized this opportunity and started calling themselves "APM vendors". However, every "hot" industry term has an expiration date attached to it and sometimes it doesn't take long for a company to go from being one of the biggest promoters of an industry term to getting to the point where it doesn't even want to be associated with it.

Back in 2008, there were more than 50 technology vendors that used the term APM to position products that they provide and that number is now down to fewer than 30. So, have these 20+ companies gone out of business or completely changed their product portfolios? No, but they have realized that the term APM got diluted and that it is in their best interest to separate themselves from technologies that address the same problem as they do, only from a different perspective.

Being thrown into the same technology bucket with companies that are addressing a similar problem from a different perspective could be a major challenge for many technology companies. Organizations that are in this position typically have two options: 1) wait until the market matures to the point when it becomes obvious that their solution is significantly different than other products in the same "bucket", or 2) coin a new term to describe a category in which their product belongs, promote the heck out of it and hope that it will become an industry accepted term. It took a combination of these two approaches to somewhat change the boundaries of the APM "bucket". That resulted in more market awareness about the differences between two groups of products that are also addressing challenges of managing application performance, but doing it from different perspectives: end-user experience monitoring and business transaction management (BTM).

The increased interest of end-user organizations in having visibility into how their applications are performing, not only from the perspective of their IT departments but from the perspective of business users, resulted in more market awareness about the role that end-user experience monitoring solutions are playing in managing application performance. The market matured enough to become more aware of the fact that different flavors of technologies for monitoring the quality of end-user experience, such as those provided by Aternity, Knoa Software, Coradiant or AlertSite, do not compete against, but complement vendors such as OPNET, OpTier or Quest's Foglight.

On the other side, vendors that specialize in managing application performance from a business transaction perspective also found a way to raise awareness about the differences between their solutions and many other APM products. This resulted in an increased adoption of the term BTM when describing capabilities of these solutions. These solutions are taking a different approach when addressing issues with application performance, as compared to some other APM vendors, and enable organizations to monitor the performance of applications across an entire transaction flow. Some of the vendors that fall in this group include OpTier, Nastel, INETCO, Correlsense, Precise Software, dynaTrace and AmberPoint (acquired by Oracle).

|

|

Written by Bojan Simic |

| November 05, 2010 |

|

On October 22, Riverbed acquired CACE Technologies, a network monitoring vendor. CACE provides solutions for network traffic capture and analysis and is also a sponsor of the Wireshark project, an open-source network monitoring tool that is deployed by millions of end-users. CACE's products will become a part of Riverbed's Cascade business unit, and Riverbed stated that Wireshark will remain a free tool.

This acquisition can impact Riverbed's market position in three key areas.

1) WAN Optimization

Riverbed has been experiencing a lot of success in the WAN optimization market and many of its competitors find it difficult to compete with the data reduction and acceleration capabilities of Riverbed's Steelhead technology. Even though WAN acceleration techniques alone are enabling organizations to improve application performance over the WAN, due to the complexity of WAN traffic, organizations are increasingly looking for WAN optimization solutions that couple acceleration techniques with capabilities for visibility into WAN performance.

Riverbed responded to this trend by acquiring a network visibility vendor, Mazu Networks, in January of 2009. Even though the Mazu acquisition allowed Riverbed to add advanced network monitoring capabilities to its portfolio, it didn't significantly impact Riverbed's position in the WAN optimization market, as Mazu products were provided as a separate product offering from Riverbed's WAN optimization gear through the Cascade business unit. Riverbed did take several steps to tighten integration with Cascade solutions, but it hasn't provided both acceleration and visibility capabilities on a single platform, which is the approach that vendors such as Exinda, Expand Networks, Ipanema Technologies and Silver Peak have been using to create competitive advantages.

The acquisition allows Riverbed to enhance the visibility capabilities of its WAN optimization solution by integrating CACE Pilot with its Steelhead appliances. CACE Pilot is a solution for analyzing network performance, and using it to process the data that is being collected by Steelhead appliances will enable Riverbed to provide WAN acceleration and visibility capabilities through the same platform.

|

|

Written by Bojan Simic |

| October 25, 2010 |

|

Network emulation technology was originally designed to help network managers, IT operations teams and developers to create lab environments that simulate their actual network conditions for testing application performance in pre-production. These solutions allow organizations to use historic network performance data to create virtual testing models that generate information about expected levels of performance and help eliminate network performance bottlenecks before applications go into production. Providers of this type of technology include: Anue Systems, Apposite Technologies, iTrinegy and Shunra Software, while OPNET provides a somewhat similar solution for building network models. End-user organizations are reporting that the accuracy of simulated network environments created by these technologies is around 95% and that, somewhat quickly, these solutions pay for themselves. Some organizations that I have had the chance to speak with reported that their network emulation products have paid for themselves four times over in just labor costs alone, as they significantly reduced the number of incidents with application performance in production that IT teams had to deal with.

Network emulation technologies have been around for a while and, even though they provide some measurable business benefits for organizations, they never reached widespread adoption in the enterprise. One of the main reasons for this is that many organizations are interested in using these solutions only when they are planning new technology rollouts or making changes to existing applications. Therefore, larger organizations that are building a lot of custom applications and have new rollouts on an almost weekly basis have been the main beneficiaries of these solutions. On the other hand, organizations that have only a few new technology rollouts per year have not been able to justify making investments in network emulation solutions. Vendors in this space have been trying to make their solutions more accessible to end-users by providing their capabilities as a managed service or adjusting pricing models, but the key obstacles to the widespread adoption of this technology still remain.

|

|

Written by Bojan Simic |

| October 12, 2010 |

|

Preliminary findings of TRAC’s end-user survey show that organizations are still struggling to gain full visibility into their IT services and infrastructure. Many of the organizations surveyed are reporting that, even though they made significant investments in new IT monitoring and management tools and increased the amount of performance data that they have on hand, they are still not seeing any significant improvements in key performance indicators (KPIs). More than half of these organizations are reporting that the performance data they are collecting is not actionable and many of them find it difficult to prevent performance issues before end-users are impacted.

Organizations have been asking for more visibility into IT performance and many vendors responded by providing more monitoring points, better network taps, new modules for seeing deeper into parts of the infrastructure or expanding monitoring into new areas. These product enhancements did help organizations see deeper and wider, but didn’t necessarily help them have more visibility. Now that organizations have all of this data on hand, the challenge becomes: how to make the most sense of it and turn this data into actionable information?

Some vendors realized this opportunity and designed management solutions for correlating, normalizing and providing the right context for the data that organizations have access to.

One example of this type of vendor is ASG Software. The company’s Enterprise Automation Management Suite (EAMS) sits on top of multiple tools for monitoring IT infrastructure and services and enables companies to put this data into the right context so they can identify and resolve performance issues. Also, VKernel is a virtualization management vendor that leverages data collected mostly from VMware management tools and applies a set of algorithms that allow organizations to conduct a “what-if analysis” for capacity planning, optimization and inventory management in virtualized environments. Another example of a vendor that is capitalizing on this opportunity is Netuitive. The company’s self-learning technology provides dynamic performance thresholds and performance data correlation capabilities that allow organizations to leverage data from other monitoring tools and constantly adjust to changes in IT environments, while reducing management overhead. Also, Monolith Software is providing a platform for correlating and normalizing information about different aspects of IT management, such as asset, fault or performance management.

|

|

|

Written by Bojan Simic |

| September 21, 2010 |

|

- Aryaka Networks Launches a Cloud Solution for Application Delivery to Remote Sites -

Today, Aryaka Networks came out of stealth mode and announced its cloud solution for WAN optimization. The solution is based on a number of globally distributed points of presence for WAN optimization that sit on large carrier networks around the world. Aryaka allows organizations to deploy WAN optimization capabilities by using a SaaS platform and set up WAN optimization techniques in only a few clicks, without having to deploy any additional hardware.

The biggest area of change in the WAN optimization market over the last three years has been the delivery method of these solutions. The majority of announcements that have been made by WAN optimization vendors were centered around turning their hardware solutions into virtual appliances or enabling their products to better support managed WAN optimization services. This trend has been driven by the end-users’ request to simplify the management of WAN optimization solutions, reduce the total cost of ownership, support different network topologies and make these products more appealing for organizations that are deploying virtualization and cloud computing services. Aryaka’s solution takes this a step further and provides an innovative approach for addressing some of the key concerns that end-user organizations have about deploying WAN optimization solutions.

The trend of moving WAN optimization hardware out of the branch has been resonating with end-user organizations and a majority of WAN optimization vendors have been doing a good job of trying to adjust to it. However, Aryaka is not only taking WAN optimization solutions completely out of the branch, but also moving them outside of corporate firewalls, providing an almost CDN-like infrastructure for delivering these capabilities (which should not come as a surprise if you know that the founder of Aryaka was also a founder of Speedera Networks, a CDN company that was acquired by Akamai in 2005).

|

|

Written by Bojan Simic |

| September 15, 2010 |

|

Today, Keynote Systems and dynaTrace announced a strategic partnership that would allow end-users to leverage these two solutions in an integrated fashion. This is the second strategic partnership that Keynote has created in this space over the last two months, as it formed a similar type of relationship with OPNET Technologies that was announced on July 22. These partnerships might be confusing to some, as it might seem that these three companies are essentially doing the same thing: monitoring the performance of business-critical applications. However, while Keynote specializes in monitoring the quality of end-user experience and performance testing for Web applications from outside of the corporate firewall, OPNET and dynaTrace provide solutions for monitoring application performance across enterprise infrastructure inside of the firewall.

The general perception of solutions for end-user experience monitoring, such as the one that Keynote is providing, is that they are very effective in identifying when business users are experiencing problems with application performance, but they are not as effective in drilling down into parts of the application delivery chain to isolate and resolve the root cause of the problem. On the other hand, tools for monitoring the performance of internal infrastructure, such as OPNET or dynaTrace, are able to monitor the transaction flow of applications across the network and into the data center, and provide a deeper dive into how applications are performing, what is causing performance problems and how they can be prevented and resolved. TRAC’s recent report “10 Things to Consider When Evaluating End-User Monitoring Solutions” revealed that the ability to integrate tools for monitoring the quality of end-user experience with tools for monitoring enterprise infrastructure is one of the key aspects of having full visibility into application performance. With that said, there is a clear value that end-user organizations can experience when products that include robust capabilities for application performance management (such as OPNET and dynaTrace) get integrated with one of the leading solutions for end-user experience monitoring from outside of the firewall (Keynote).

However, in order to evaluate the true significance of these partnerships, they should be analyzed in the context of some of the key dynamics in this market.

|

|

Written by Bojan Simic |

| August 08, 2010 |

|

In October of 2009, when we launched TRAC Research, we based our approach for covering IT performance management technologies on two pieces of advice that we were given by end-users:

- Don’t evaluate products by throwing them into technology buckets, but talk about what these products can do in specific usage scenarios

- Distinguish impactful from “cool” technologies, meaning discover what the measurable business benefits of deploying a technology solution are, not how “hot” the technology is

We thought that the best approach for doing this would be to launch an end-user survey and ask folks that are using this technology what their experiences are. This is when things started to get really messy. Before we even formulated the questions, we conducted close to 150 interviews with end-users, executives of technology vendors, prominent writers and some true thought leaders in this space to make sure that the questions are spot on to what they care about. Just to clarify, none of us are rookies in this space and for me, this is the 18th survey of this type that I’ve created. This time, though, launching the survey was more “interesting” than usual.

|

|

Written by Bojan Simic |

| May 25, 2010 |

|

One of the emerging trends in IT performance management is that the proliferation of SaaS and cloud computing technologies is changing how organizations go about using and managing IT services. These trends are adding a new dimension to service level and performance monitoring, and organizations are increasingly expecting a similar level of flexibility from their management tools as they are getting from their SaaS and cloud deployments. This also opens up new opportunities for management vendors to differentiate themselves from the competition and increase their presence in new markets by acquiring technologies that are well positioned to address new management challenges.

Our recent article highlighted two technology companies that are likely acquisition targets based on their technology, alignment with key market trends and the ability of their solutions to fill in technology and go-to-market gaps that larger vendors currently have. In part two of this series, we are covering two additional companies that meet the same criteria.

Again, this listing is not based on any inside information.

|

|

|